Introduction to turbulence/Statistical analysis/Probability

From CFD-Wiki


Revision as of 13:08, 30 May 2006


Probability

The histogram and probability density function

The frequency of occurrence of a given amplitude (or value) from a finite number of realizations of a random variable can be displayed by dividing the range of possible values of the random variable into a number of slots (or windows). Since all possible values are covered, each realization fits into only one window. For every realization a count is entered into the appropriate window. When all the realizations have been considered, the number of counts in each window is divided by the total number of realizations. The result is called the histogram (or frequency of occurrence diagram). From the definition it follows immediately that the sum of the values of all the windows is exactly one.

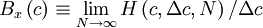

The shape of a histogram depends on the statistical distribution of the random variable, but it also depends on the total number of realizations, <math>N</math>, and the size of the slots, <math>\Delta c</math>. The histogram can be represented symbolically by the function <math>H_{x}(c, \Delta c, N)</math> where <math>c \leq x < c + \Delta c</math>, <math>\Delta c</math> is the slot width, and <math>N</math> is the number of realizations of the random variable. Thus the histogram shows the relative frequency of occurrence of a given value range in a given ensemble. Figure 2.3 illustrates a typical histogram. If the size of the sample is increased so that the number of realizations in each window increases, the diagram will become less erratic and will be more representative of the actual probability of occurrence of the amplitudes of the signal itself, as long as the window size is sufficiently small.
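The construction described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `histogram`, the choice of 20 slots, and the normally distributed test samples are not part of the original text.

```python
import numpy as np

def histogram(samples, c_min, c_max, n_slots):
    """Relative-frequency histogram of the samples.

    Returns the left edge c of each slot, the slot width dc, and the
    fraction of the N realizations that fall in each slot.
    """
    samples = np.asarray(samples, dtype=float)
    n_total = samples.size                      # N, number of realizations
    dc = (c_max - c_min) / n_slots              # slot width
    # Index of the slot each realization falls into.
    idx = np.floor((samples - c_min) / dc).astype(int)
    # A value exactly at c_max (or round-off) is placed in the last slot.
    idx = np.clip(idx, 0, n_slots - 1)
    counts = np.bincount(idx, minlength=n_slots)
    edges = c_min + dc * np.arange(n_slots)
    return edges, dc, counts / n_total

# Assumed test data: 10,000 realizations of a normally distributed variable.
rng = np.random.default_rng(0)
x = rng.normal(size=10_000)
c, dc, H = histogram(x, x.min(), x.max(), 20)
print(H.sum())   # the slot values add up to one, up to round-off
```

Because every realization is counted in exactly one slot, the slot values sum to one whatever the slot width.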

If the number of realizations, <math>N</math>, increases without bound as the window size, <math>\Delta c</math>, goes to zero, the histogram divided by the window size goes to a limiting curve called the probability density function, <math>B_{x}(c)</math>. That is,

| <math> B_{x}(c) \equiv \lim_{N \rightarrow \infty, \; \Delta c \rightarrow 0} \frac{H_{x}(c, \Delta c, N)}{\Delta c} </math> | (2) |

Note that as the window width goes to zero, so does the number of realizations which fall into it, <math>N H_{x}</math>. Thus it is only when this number (or relative number) is divided by the slot width that a meaningful limit is achieved.
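The limiting process can be illustrated numerically. The sketch below divides the relative-frequency histogram by the slot width for growing sample sizes and shrinking slots. The standard normal test variable, the function name `pdf_estimate`, and the particular sample sizes are assumptions made for the example only.

```python
import numpy as np

def pdf_estimate(samples, n_slots):
    """Estimate the pdf as (relative frequency) / (slot width)."""
    counts, edges = np.histogram(samples, bins=n_slots)
    dc = edges[1] - edges[0]                  # slot width
    H = counts / samples.size                 # relative frequency per slot
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, H / dc, dc

rng = np.random.default_rng(1)
# More realizations together with narrower slots.
for N, n_slots in [(1_000, 10), (100_000, 50), (1_000_000, 200)]:
    x = rng.normal(size=N)
    c, B, dc = pdf_estimate(x, n_slots)
    # Known density of the assumed test variable, for comparison only.
    exact = np.exp(-0.5 * c**2) / np.sqrt(2.0 * np.pi)
    print(N, dc, np.max(np.abs(B - exact)), np.sum(B * dc))
```

The last printed column is the integral of the estimate over all slots, which stays at one, while the third column (the largest deviation from the known density) should shrink as the sample grows.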

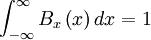

The probability density function (or pdf) has the following properties:

- Property 1:

| <math> B_{x}(c) \geq 0 </math> | (2) |

always.

- Property 2:

| <math> Prob \left\{ c < x < c + dc \right\} = B_{x}(c) dc </math> | (2) |

where <math>Prob \left\{ \; \right\}</math> is read "the probability that".

- Property 3:

| <math> Prob \left\{ x < c \right\} = \int^{c}_{-\infty} B_{x} \left( c^{'} \right) d c^{'} </math> | (2) |

- Property 4:

| <math> \int^{\infty}_{-\infty} B_{x}(c) dc = 1 </math> | (2) |

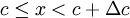

The condition imposed by property (1) simply states that negative probabilities are impossible, while property (4) assures that the probability is unity that a realization takes on some value. Property (2) gives the probability of finding the realization in an interval around a certain value, while property (3) provides the probability that the realization is less than a prescribed value. Note the necessity of distinguishing between the running variable, <math>c</math>, and the integration variable, <math>c^{'}</math>, in equations 2.14 and 2.15.
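The four properties can be checked numerically for any given density. The sketch below does so for an assumed example pdf (a zero-mean, unit-variance Gaussian sampled on a finite grid). The helper names `trapezoid` and `index_of` are hypothetical, and the trapezoidal rule stands in for the exact integrals.

```python
import numpy as np

def trapezoid(f, c):
    """Trapezoidal-rule integral of the samples f over the grid c."""
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(c)))

# Assumed example pdf on a grid wide enough that the tails are negligible.
c = np.linspace(-8.0, 8.0, 16001)
B = np.exp(-0.5 * c**2) / np.sqrt(2.0 * np.pi)
h = c[1] - c[0]                              # grid spacing

def index_of(value):
    """Grid index closest to the given value."""
    return int(round((value - c[0]) / h))

# Property 1: the density is never negative.
prop1 = bool(np.all(B >= 0.0))

# Property 2: for a small interval, Prob{c < x < c + dc} is close to B(c) dc.
i, j = index_of(1.0), index_of(1.01)
prob_interval = trapezoid(B[i:j + 1], c[i:j + 1])
prop2_approx = B[i] * (c[j] - c[i])

# Property 3: Prob{x < 1} is the integral of the density up to 1.
prob_below = trapezoid(B[:i + 1], c[:i + 1])

# Property 4: the density integrates to one.
total = trapezoid(B, c)

print(prop1, prob_interval, prop2_approx, prob_below, total)
```

Property 2 only holds approximately for a finite interval width; the agreement improves as the interval shrinks.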

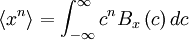

Since <math>B_{x}(c) dc</math> gives the probability of the random variable <math>x</math> assuming a value between <math>c</math> and <math>c + dc</math>, any moment of the distribution can be computed by integrating the appropriate power of <math>c</math> over all possible values. Thus the <math>n</math>-th moment is given by:

| <math> \left\langle x^{n} \right\rangle = \int^{\infty}_{-\infty} c^{n} B_{x}(c) dc </math> | (2) |
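As a worked example of the moment integral, the sketch below evaluates it numerically for an assumed uniform density on the interval from 0 to 1, for which the n-th moment is known to be 1/(n+1). The function names `trapezoid` and `moment` are illustrative choices, not part of the original text.

```python
import numpy as np

def trapezoid(f, c):
    """Trapezoidal-rule integral of the samples f over the grid c."""
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(c)))

def moment(n, B, c):
    """n-th moment: integral of c**n weighted by the density B(c)."""
    return trapezoid(c**n * B, c)

# Assumed example pdf: uniform on [0, 1], so the density is 1 there
# and zero elsewhere (the grid covers only the non-zero part).
c = np.linspace(0.0, 1.0, 10001)
B = np.ones_like(c)

for n in range(1, 5):
    print(n, moment(n, B, c), 1.0 / (n + 1))
```

The zeroth moment is simply the normalization condition of property 4.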

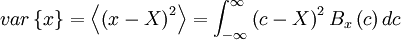

If the probability density is given, the moments of all orders can be determined. For example, the variance can be determined by:

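| <math> var \left[ x \right] = \left\langle \left( x - \left\langle x \right\rangle \right)^{2} \right\rangle = \int^{\infty}_{-\infty} \left( c - \left\langle x \right\rangle \right)^{2} B_{x}(c) dc </math> | (2) |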

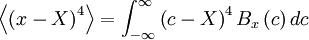

The central moments give information about the shape of the probability density function, and vice versa. Figure 2.4 shows three distributions which have the same mean and standard deviation, but are clearly quite different. Beneath them are shown random functions of time, which might have generated them. Distribution (b) has a higher value of the fourth central moment than does distribution (a). This can be easily seen from the definition

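| <math> \left\langle \left( x - \left\langle x \right\rangle \right)^{4} \right\rangle = \int^{\infty}_{-\infty} \left( c - \left\langle x \right\rangle \right)^{4} B_{x}(c) dc </math> | (2) |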

since the fourth power emphasizes the fact that distribution (b) has more weight in the tails than does distribution (a).

It is also easy to see that because of the symmetry of the pdfs in (a) and (b) all the odd central moments will be zero. Distributions (c) and (d), on the other hand, have non-zero values for the odd moments, because of their asymmetry. For example,

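| <math> \left\langle \left( x - \left\langle x \right\rangle \right)^{3} \right\rangle = \int^{\infty}_{-\infty} \left( c - \left\langle x \right\rangle \right)^{3} B_{x}(c) dc </math> | (2) |

is equal to zero if <math>B_{x}</math> is an even function about the mean.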
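The effect of symmetry on the odd central moments can be seen in a short numerical comparison. The two densities used here, a unit-variance Gaussian (symmetric) and the exponential density exp(-c) for c ≥ 0 (asymmetric), are assumed examples and are not the distributions of Figure 2.4. The helper names are hypothetical.

```python
import numpy as np

def trapezoid(f, c):
    """Trapezoidal-rule integral of the samples f over the grid c."""
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(c)))

def central_moment(n, B, c):
    """n-th central moment: integral of (c - mean)**n weighted by B(c)."""
    mean = trapezoid(c * B, c)
    return trapezoid((c - mean)**n * B, c)

# Assumed symmetric example: zero-mean, unit-variance Gaussian.
c_sym = np.linspace(-10.0, 10.0, 20001)
B_sym = np.exp(-0.5 * c_sym**2) / np.sqrt(2.0 * np.pi)

# Assumed asymmetric example: exponential density exp(-c) for c >= 0.
c_asym = np.linspace(0.0, 60.0, 60001)
B_asym = np.exp(-c_asym)

for name, B, c in [("symmetric", B_sym, c_sym), ("asymmetric", B_asym, c_asym)]:
    print(name,
          central_moment(2, B, c),   # variance
          central_moment(3, B, c),   # third central moment
          central_moment(4, B, c))   # fourth central moment
```

Both examples have unit variance, yet the third central moment vanishes only for the symmetric one, and the fourth central moment is larger for the density with the heavier tail.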

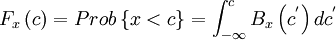

The probability distribution

Sometimes it is convenient to work with the probability distribution instead of with the probability density function. The probability distribution is defined as the probability that the random variable has a value less than or equal to a given value. Thus from equation 2.15, the probability distribution is given by

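| <math> F_{x} \left( c \right) = Prob \left\{ x < c \right\} = \int^{c}_{-\infty} B_{x} \left( c^{'} \right) d c^{'} </math> | (2) |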
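The running integral that defines the probability distribution can be approximated by a cumulative sum over a grid. The sketch below is a minimal illustration, assuming a unit-variance Gaussian density as the example and a trapezoidal rule for the integral; the function name `distribution` is hypothetical.

```python
import numpy as np

def distribution(B, c):
    """Running trapezoidal integral of the density B up to each grid point c."""
    increments = 0.5 * (B[1:] + B[:-1]) * np.diff(c)
    return np.concatenate(([0.0], np.cumsum(increments)))

# Assumed example pdf on a grid wide enough that the tails are negligible.
c = np.linspace(-8.0, 8.0, 1601)
B = np.exp(-0.5 * c**2) / np.sqrt(2.0 * np.pi)
F = distribution(B, c)

print(F[0], F[len(c) // 2], F[-1])   # values at the left end, the middle, and the right end
```

Since the density is never negative, the resulting curve never decreases, and it rises from zero at the left end of the grid to one at the right end.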

Gaussian (or normal) distributions
